Change detector

Overview

Picterra provides two pathways for change detection:

- Change detection model

- Comparative analysis

Change detection model

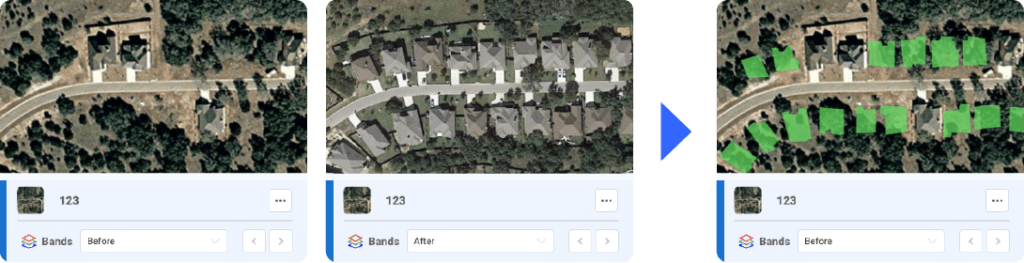

This approach lets you train a dedicated change detection model that identifies changes between two images taken at different times, such as objects appearing or disappearing, or changes in land cover. You can focus on specific changes, like a building’s construction phase, and train a machine learning model on your annotations of them. Because a single model analyzes the pair of images directly, this method is more flexible and efficient than comparative analysis.

How it works

Change detection process

Training a change detector

Upload your imagery

Create a change detector

Select a pair of images

Annotate changes

When annotating, make sure the left-side image is always more recent than the right-side one: a detector does not learn the same thing from an appearing object as from a disappearing one. To avoid mistakes, check that your images’ capture dates are accurate; you can adjust them in the Image Details dialog.
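The ordering rule above can be expressed as a simple date comparison. The sketch below is only an illustration: `ImageInfo` and `check_pair_order` are hypothetical names, not part of the Picterra platform or its API, and the capture dates are made up.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ImageInfo:
    """Hypothetical record: an image name and its capture date."""
    name: str
    capture_date: date


def check_pair_order(left: ImageInfo, right: ImageInfo) -> bool:
    """Return True when the left image is strictly more recent than the right one."""
    return left.capture_date > right.capture_date


# Example pair with made-up dates.
left = ImageInfo("site_2024.tif", date(2024, 6, 1))
right = ImageInfo("site_2023.tif", date(2023, 6, 1))

print(check_pair_order(left, right))  # True
print(check_pair_order(right, left))  # False
```

A check like this is only as reliable as the capture dates themselves, which is why they should be verified before annotating.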

Note that you can annotate as many pairs of images as you want; the detector is trained on all of the annotations it contains.

Run a change detector

Training a change detector requires annotations drawn on pairs of images, and running change detectors also requires a pair of images. The output of a change detector is a vector layer that represents the instances of the changes it was trained to recognize.

Best practice: set up detection areas to keep detection time low, since a change detector has to process two images instead of one.

Select your images

View and manage your results

Activate/Deactivate split view

Change detection features

Remote imagery

Change detectors can be trained and run on remote imagery. This follows the regular process for remote imagery servers. The only difference is that you will need one server for each capture date:

- Make sure you have set up at least two remote imagery servers in the Imagery Servers list (there should be one for each date of interest)

- Import your AOI (once for each date), either in streaming mode or in download mode (streaming will be quicker!)

- Add the imported remote imagery to your detector, then annotate changes as if those were regular images.

Once you have annotated and trained your detector, you can run it on your imported images. This works the same way as running a change detector on regular images.

Comparative analysis

This approach works best when the detections on each image are accurate and the changes of interest are objects disappearing, appearing, or moving.

1. Detect in “before” and “after” images

To compare two images, we detect objects/textures in both the “before” and “after” images and compare the results. An object detected only in the “before” image counts as a disappearance, and an object detected only in the “after” image counts as an appearance. For moving objects, we determine whether something has moved based on the overlap between its detections in the two images. This means the object may only shift or rotate slightly, so that its “before” and “after” detections still overlap and can be matched. If it moves too far, it will look like one object disappearing and another appearing.

2. Compare detections

In a second step, the detections from the “before” and “after” images are used to identify disappearances, appearances, or moving objects. This is done by feeding the “before” and “after” images to an Advanced Tool. Note that the Advanced Tool for comparative change detection analysis is not available by default but can be enabled on your account on request.
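To make the overlap-based matching more concrete, here is a minimal sketch of how two sets of detections could be compared. It is an illustration under stated assumptions, not the implementation of the Advanced Tool: detections are simplified to axis-aligned bounding boxes, matching is greedy, and the names `overlap_area` and `compare_detections` are hypothetical.

```python
from typing import Dict, List, Tuple

# Assumption: each detection is simplified to a box (xmin, ymin, xmax, ymax).
Box = Tuple[float, float, float, float]


def overlap_area(a: Box, b: Box) -> float:
    """Area of the intersection of two boxes (0.0 when they do not overlap)."""
    width = min(a[2], b[2]) - max(a[0], b[0])
    height = min(a[3], b[3]) - max(a[1], b[1])
    return max(0.0, width) * max(0.0, height)


def compare_detections(before: List[Box], after: List[Box]) -> Dict[str, list]:
    """Classify detections as disappeared, appeared, moved, or unchanged."""
    changes: Dict[str, list] = {
        "disappeared": [], "appeared": [], "moved": [], "unchanged": []
    }
    matched_after = set()
    for b in before:
        # Greedy match: the not-yet-matched "after" box with the largest overlap.
        best_index, best_overlap = None, 0.0
        for i, a in enumerate(after):
            if i in matched_after:
                continue
            area = overlap_area(b, a)
            if area > best_overlap:
                best_index, best_overlap = i, area
        if best_index is None:
            # No overlapping detection in the "after" image.
            changes["disappeared"].append(b)
        else:
            matched_after.add(best_index)
            a = after[best_index]
            if a == b:
                changes["unchanged"].append(b)
            else:
                changes["moved"].append((b, a))
    # "After" boxes that were never matched are new objects.
    changes["appeared"] = [a for i, a in enumerate(after) if i not in matched_after]
    return changes


before = [(0, 0, 2, 2), (10, 10, 12, 12), (20, 20, 22, 22)]
after = [(1, 0, 3, 2), (20, 20, 22, 22), (30, 30, 32, 32)]
result = compare_detections(before, after)
print(result["moved"])        # [((0, 0, 2, 2), (1, 0, 3, 2))]
print(result["disappeared"])  # [(10, 10, 12, 12)]
print(result["appeared"])     # [(30, 30, 32, 32)]
```

In this sketch a matched pair counts as unchanged only when both boxes are identical; real detections rarely align exactly, so a practical comparison would need a tolerance. The example also shows why accurate detections matter: a missed object in either image is indistinguishable from a real appearance or disappearance.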

3. Change alerts dashboard (optional)

Contact your customer success representative to discuss options for a custom dashboard which allows manual review of automatically detected changes.

Change detection

Change detection model

1. Combine “before” and “after” images

Use the Format images for change detection Advanced Tool to create a “change” image that can be used to train your detector. This will require two images, which should cover the same geographical location and have the same number of bands.

Contact your customer success representative to enable this Advanced Tool if it is not yet available on your account.

2. Set up band settings

Open the added image and hit the Edit bands button. This will open the multispectral dialog in which you should edit the band settings for each image.

A typical change detection setup would have 2 band settings per image:

- “before” using bands 1, 2 and 3

- “after” using bands 4, 5 and 6

After doing this for every training image, you are ready for the next steps.
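The band layout above generalizes to any band count, as long as both source images have the same number of bands. The sketch below assumes that the combined “change” image stores all “before” bands first, followed by the “after” bands, which is what the typical setup suggests. The function name `band_settings` is hypothetical and not part of the platform.

```python
from typing import Dict, List


def band_settings(bands_per_image: int) -> Dict[str, List[int]]:
    """Return 1-based band indices for the "before" and "after" band settings.

    Assumes the combined image holds the "before" bands first, then the
    "after" bands, and that both source images have the same band count.
    """
    if bands_per_image < 1:
        raise ValueError("bands_per_image must be at least 1")
    before = list(range(1, bands_per_image + 1))
    after = list(range(bands_per_image + 1, 2 * bands_per_image + 1))
    return {"before": before, "after": after}


print(band_settings(3))  # {'before': [1, 2, 3], 'after': [4, 5, 6]}
```

For two RGB images this reproduces the typical setup: bands 1 to 3 for “before” and bands 4 to 6 for “after”.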

3. Labelling & Training

The annotation process is similar to the usual detector training flow. Note, however, that only changes should be annotated.

For instance, if you are interested in buildings that were built between two dates, do not annotate all buildings, but only those buildings that aren’t there when viewing the “before” bands and appear when viewing the “after” bands. The model will learn to identify differences that are similar to the ones you annotate.

Band settings display options

There are multiple ways to configure which bands are displayed:

- Enabling split screen

- Band selection

4. Running the detector

Once the change detector has been trained, it can be run on more images just like any other detector. See the Generate Results section for more information.